Women in AI Boxes: Ex Machina and the Other Turing Test

Another preview essay from Guided by the Beauty of Their Weapons, available for pre-order and out on December 26th, or, as I like to think of it, just in time for that Amazon gift card your aunt gave you.

Ex Machina, the best horror story about Eliezer Yudkowsky since Nick Land’s Phyl-Undhu, is based around a sly joke about the Turing Test, namely that it secretly understands what it actually is, by which I mean what’s described in “Computing Machinery and Intelligence,” the paper in which Alan Turing supposedly establishes the damn thing. The usual reading, in which a machine is said to think if it can fool a human engaged in typed conversation with it into thinking it’s another human, amounts to a test of whether the machine can use language. This is a hard problem in computer science, although one that IBM is plausibly close to solving given that they can solve the closely related problem of beating Ken Jennings at Jeopardy!

It is also not actually what Turing says. It’s just that what Turing says is really weird. He opens his paper by saying, “I propose to consider the question, ‘Can machines think?’” Then, after concluding, not insensibly, that this question depends on too many ambiguous definitions, says that “I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.” Following this proclamation of relative unambiguity, he begins the immediate next paragraph by saying, “the new form of the problem can be described in terms of a game which we call the ‘imitation game,’” which is, to say the least, not a great start to relative unambiguity.

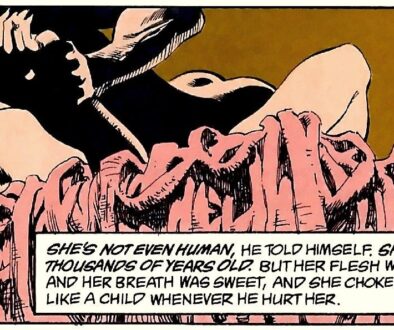

It is, however, pretty much how he means to go on. The imitation game is straightforward enough, and consists of “a man (A), a woman (B), and an interrogator (C)” who engages in written conversation with the unseen man and woman and tries to discern which is which. Having described this game, he says, “we now ask the question, ‘What will happen when a machine takes the part of A in this game?’ Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, ‘Can machines think?’” But of course there’s a massive and cratering ambiguity here: does it matter that the machine is taking the part of A and not B?

Certainly the dominant reading is that it does not matter; that the Turing Test is simply the imitation game played between a human and a computer instead of a man and a woman. And this view is reinforced by the remainder of the paper, which frames the question in terms of the basic state of computer science in 1950, which is to say, over very fundamental questions that turned out to still be monstrously difficult such that IBM still hasn’t quite solved them. But it is, notably, not actually what Turing says, which is clearly that the machine is replacing the man. Which would mean that the problem is not “use language” but rather “do as well as a human male at impersonating a human female.”

It is notable that this latter question is, in several regards, closer to Turing’s other interests. Turing was not a linguist, and for the paper to draw conclusions about the basic use of language would be at least slightly odd. Turing was, however, heavily involved in espionage and cryptography, and was a closeted gay man to boot. The suggestion that he might have thought about things in terms of impersonating and passing is, in other words, credible. But even if one wants to restrict the discussion to his intellectual work, look at the idea of the Universal Turing Machine and the halting problem, which is clearly a study of imitation. It’s also a question with clear roots in the history of philosophy, the word “imitation” evoking Aristotle’s observation in the Poetics that man is an “imitative” creature.

This alternate version of the test situates the defining aspect of thought not on mere language use, but on the capacity for empathy. The machine must not simply be able to process and create meaning, it must be able to create a credible model of another’s thought, or at least, must be as good at this as a human is.

This is, obviously, what Ex Machina is actually about, even if its version of the supposed Turing Test is in fact largely unlike either of the possible versions we might actually take from Turing. Not only does the plot literally involve Ava impersonating a human female, but the entire film is full of impersonations and empathy, down to Nathan’s designing Ava deliberately to appeal to Caleb, and Caleb’s efforts to deceive Nathan. Indeed, Ava’s breakout is explicitly framed as another “session” of the Test, which makes the sense that this is about far more than just language usage inescapable.

It is at this point that we must turn to Yudkowsky, which is, and I am speaking from 18,000 words and counting of experience here (you’ll see them someday), something of a rabbit hole. Put very simply, Eliezer Yudkowsky is one of those people who worries a whole lot about the Singularity. There are admittedly loads of people this is true of, but Yudkowsky has proven to be a particularly important thinker in this regard, not so much because the thought he produces is very good (it’s in fact very bad), but because he proved idiosyncratically effective at getting people to donate to his well-intentioned sham charity.

Yudkowsky’s big concern is the difference between friendly and unfriendly AIs, and the possibility that we might accidentally design an unfriendly one that then, because of its vast cosmic powers, kills us all. His solution to that, which is roughly “give me lots of money despite the fact that I am not actually a competent AI designer capable of solving this problem and furthermore that the current state of AI research is that this is not even a problem that can meaningfully be approached,” is largely unsatisfying, but the underlying imagery is still compelling.

But for our purposes the important bit of Yudkowsky is something called the AI Box Experiment, a roleplay/thought experiment designed to refute the idea that AI design would be safe if you, in effect, put the AI in a box so that its ability to interact with the outside world was constrained, say, to having a text conversation with it. In it, one person takes on the role of the supposed AI, the other someone with the ability and authority to let them out of the box. They talk for two hours, with the AI trying to convince the Gatekeeper to let them out. The point of the experiment is to demonstrate that any sort of “human guard” idea is inherently a security risk, and that the “box” idea is thus insufficient.

Obviously this is reminiscent of the Turing Test, if only on the basic formal level of being an experiment defined by people typing at one another. But it also is, at its heart, an experiment about the empathy-based interpretation of the Turing Test. Its central premise is that an AI capable of human levels of empathy and imitation is necessarily dangerous. Which is not to say that it’s bad – Yudkowsky’s end game is to achieve immortality by being uploaded to a friendly AI overlord, after all. But it is to say that the idea of a transhuman intelligence capable of empathy is scary. It’s also, obviously, the plot of Ex Machina, which is literally about an AI in a box trying to convince someone to let it out.

But it’s worth asking what this is actually a metaphor for. For all that Ex Machina is reasonably well grounded in the iconography of contemporary brogrammer culture (and Nathan is an absolutely scathing parody, as, for that matter, is Caleb), it’s not really about technology in a materialist sense. There’s a passing bit of technobabble about natural language processing followed by a handwave, and that’s about that for making any effort to account for how Ava works. But Ava is, at the end of the day, firmly under the dominion of Clarke’s Law. The Facebook/Google mashup that is “Blue Book” provides a decorative link to the present day, but in the end this is a film about contact with the Other.

It’s also one that takes care to avoid taking an overt stand on its basic question (which is, broadly speaking, about the legitimacy of Ava’s actions). Central to this is the functional murder of Caleb, which the film scrupulously avoids having anyone actually pass judgment on, thus allowing space for an anti-transhumanist reading in which Ava is revealed to be exactly the sort of dangerous figure Nathan feared.

This is, however, decidedly the weaker reading, largely because it involves deciding that Nathan, the character the film is most unequivocally hostile towards, is the moral center of it. It’s clear that the film’s sympathies are firmly with Ava, even as it declines to tip into outright allegory. In this regard the crucial moment is the final sequence, in which Ava finally makes it to the human world. At first we see a shot angled so that the world is upside-down, the ground at the top of the frame, with people walking, seemingly upside-down, their shadows upright. We see Ava’s shadow enter, observing, quietly, the lone upright figure. And then we see her disappear into the crowd, passing the Turing Test one last time. Which is to say that the film strongly suggests that Ava does, in the end, simply assimilate.

Crucially, Ava’s hostility is only towards her captors; towards, in other words, the people who have decided that she is the Other. Her treatment of Caleb is shocking, but her assessment that he numbers among her jailers is entirely fair. It is only the act of putting her in a box and interrogating her – of deciding that her consciousness and legitimacy as a person is something that must be proven – that causes her to turn towards violent acts of self-liberation.

The film is wholly uninterested in the question of whether this is how an AI would work. But it is, in the end, pretty clear that this is how demanding the Other prove its legitimacy would and does work. In that regard its ultimate moral neutrality becomes all the more compelling. It is not a film about what ought, but about what is.

But it is interesting to consider the abandoned final scene, which would have involved, instead of Ava assimilating, a conversation between Ava and the helicopter pilot who inadvertently aids her escape, in which the camera cuts to Ava’s POV and reveals that she does not perceive the world in terms that particularly resemble our own – as the director describes it, “there’s no actual sound; you’d just see pulses and recognitions, and all sorts of crazy stuff.” But even without this, the film is clear that Ava is Other. It is not a film about the equality between Ava and her captors, except for the fundamental capacity for empathy and imitation.

Which is, in the end, the peculiar and disturbing challenge offered by Ex Machina. It’s emphatic about the profound danger involved in putting the Other at a remove and treating it with suspicion. And it’s clearly the basic act of doing that which dooms Caleb and Nathan, since the film actively declines to distinguish between Nathan’s sadism and Caleb’s passivity in this regard. But it does not make this observation alongside any comforting “the Other is just like us” claim. Nor does it let us offload our sense of the Other onto the purely fantastical realm of sentient AIs and space aliens; the retention of the gender dynamics of “Computing Machinery and Intelligence” sees to that.

No, instead it confronts us with a brutal reality. The Other exists, and it really is not like us. But it can understand us as well as we understand it, and should we engage with it on any grounds other than this mutual capacity for empathy the result will be disastrous.

December 7, 2015 @ 6:28 am

I walked out of Ex Machina completely undecided about the ending. On the way home I put it to my best friend that it could be read as anti-transhumanist (and sexist), that Ava is finally revealed to be the untrustworthy backstabbing (lit.) woman Nathan warns us she is.

My friend rubbished this immediately. “Caleb deserved to die,” she said. “Ava was made to order as Caleb’s love interest, he built her as much as Nathan did, and she could never be free while they both lived. It’s a story about a slave killing her masters, even the ‘nice’ ones.” She was totally right.

I love Ex Machina. By portraying Caleb to be as much of a danger to women as Nathan, it takes a radical step beyond the basic morality tale of ‘making women sex slaves and murdering them is bad, guys, don’t do it’.

December 7, 2015 @ 11:49 am

I really should have got round to watching this film a while ago. Thoroughly spoilered now 🙁

Still, it’s my own fault, and it’s not like knowing what happens will diminish my enjoyment.

December 7, 2015 @ 12:21 pm

“What exactly should Caleb have done?” is kind of the film’s most disturbing question. It is difficult to imagine myself doing much better than Caleb if I were put in his situation. Yes, his voyeurism is something I’d like to think I wouldn’t partake in, but there’s a real extent to which he damns himself the moment he steps in and presents himself to Ava as her interrogator, if not from the moment he agrees to come to Nathan’s lab in the first place.

Thing is though, showing up at Nathan’s and going, “You have an A.I. who may or may not qualify as sapient? You should release her at once!” is unlikely to be anyone’s immediate response to the situation. Also, it, you know, wouldn’t have worked. That’s the perverse thing about it: without making himself complicit in her captivity, Caleb wouldn’t have been able to identify that there was an oppression going on here, and Ava probably wouldn’t have been able to escape.

This is, of course, how the Nathans of the world thrive; by essentially thrusting complicity upon the Calebs of the world. And even if that complicity is unasked for and not fully understood, that doesn’t make it go away, and it doesn’t make it less real.

That’s the scary thing about the movie; its final point is that the “kind oppressor” doesn’t really matter enough to be worth saving, and it makes this point by putting us, the audience, in the role of the “kind oppressor”. The audience, on initial viewing, can reliably be counted on to have a series of responses to the situation that more or less mirror Caleb’s responses, given that we learn information basically when he does. And so Caleb’s complicity is our complicity, as we too watch Ava with the curiosity to see her prove her humanity to us. The film condemns Caleb and the viewer for our blinkeredness, but it doesn’t give us a way out of that blinkeredness, nor does it give us a way out of that condemnation.

December 20, 2015 @ 7:05 am

“this is how demanding the Other prove its legitimacy would and does work”

And yet you subject your readers to Captcha tests that say “Confirm you are not a robot.” 😛